Problem definition

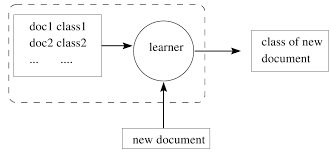

During our process of building a Recommender System, we had to face a text classifier problem. The problem could be understood as: “when a user is interested in specific paragraph (for example, highlight a paragraph or even share it), how could we detect the category of the paragraph”.

One application of this problem is:

- We have Eva.vn data which consists of ~6500 URLs. Our parsers can parse keywords, content, … from each URL.

- We have the category set of Eva.vn

- Given a single random URL, predict its category based on the data that we have (the predicted category must belong to Eva’s category set).

In this article, we will show you a pretty simple way to tackle this problem.

Naive Bayes for text classification

Let’s consider our problem in a different way.

- We have a set of $latex C = \left \{ c_{1}, c_{2}, …, c_{k} \right \}$categories. All of them are uniformly distributed.

- We have a set of $latex D = \left \{ d_{1}, d_{2}, …, d_{m} \right \}$ documents, each document is assigned to a specific category.

- Each document contains at set of words which is a subset of the universe: $latex W = \left \{ w_{1}, w_{2}, …, w_{n} \right \}$

If our input is a set of words $latex {W}’ = \left \{ w_{1}, w_{2}, …, w_{t} \right \}$, we have to find the most suitable category c for the input.

Simply by counting, we achieved the number of appearances of a word $latex w_{i}$ in each category. Going just a little deeper, we can calculate the probability of word $latex w_{i}$given category $latex c_{j}$. Or in a mathematical way, we could calculate $latex P(w_i, c_j)$.

Applying the Naive Bayes rule we have:

$latex P(c_j | w_1, w_2, …, w_t) \sim P(c_j)P(w_1|c_j)P(w_1|c_j) … P(w_t|c_j)$

The Bayes classifier will work like this:

$latex c = argmax(P(c_j|w_1, w_2, …, w_t))$

For a long context input, we might not be able to compute $latex P(c_j | w_1, w_2, …, w_t)$ because $latex P(w_i|c_j) < 1$ and multiplying t words may result in 0. To solve it, we often calculate:

$latex log(P(c_j | w_1, w_2, …, w_t)) = log(P(c_j)) + \sum log(P(w_i|c_j))$

Another problem is that: in real world data we may have to face the zero-problem (the number of appearance of $latex w_i$ in category $latex c_j$ is zero).

This leads to:

$latex P(w_i|c_j) = 0$

Hence, we will received:

$latex P(c_j|w_1, w_2, …, w_t) = 0$

To solve this problem, we could apply the additive smoothing method. Instead of normally calculating the occurrences of $latex w_i$ in category$latex c_j$ we add the smoothing value δ. The occurrence of$latex w_i$ in category$latex c_j$ will become:

$latex \frac{count(w_i \in c_j) + \sigma}{count(w \in c_j) + \sigma \times \left | W \right |}$

Real world data

To download Eva.vn data that we have crawled, visit this link

The input format is the following:

[code lang=”html”]

Version<tab>Timestamp<tab>url<tab>categories<tab>title<tab>imageLink<tab>keywords<tab>content

[/code]

The categories section describes the category tree of each url, they are separated by “/” character. The first category is the root category of the url. To simplify the problem, we only consider the root category.

In this example, we only consider the keywords as the parameter for classifier

Now, let’s shuffle our file and split it into two parts, one for training and another one for testing. Note that, the number of lines from the input file is 6456

[code lang=”bash”]

shuf -n 6456 keywords.txt > randomedkeywords.txt

head -n 1000 randomedkeywords.txt > validation.txt

tail -n 5456 randomedkeywords.txt > training.txt

[/code]

After this step, we received 3 files: randomedkeywords.txt, training.txt, and validation.txt. Thetrain.txt file will be used to train our classifier and the validation.txt will be used for testing our classifier.

Applying the aforementioned algorithm into the training.txt, we received the Naive Bayes classifier for Eva.vn data.

Testing result showed that the algorithm works quite well, yielding the precision of 0.815; a pretty impressive result for a simple algorithm.

Drawback and Future work

Naive Bayes classifier based on Naive Bayes assumption in which every word in the document is independent. However, in real life data, they are not. Each word in a sentence always appears for a reason, it may support other words as well. As the result, Naive Bayes cannot consider the semantics of the input.

Recent trends in Topic Modeling showed that LDA, LSA work quite well with this type of problem. In the future article, we will show you how to use LDA to solve our problem.

Another solution is using word2vec. By vectorizing the input text, we could use other classification algorithms such as SVM, Neural Network, … to classify our input data.